The Original Plan

In the early days of writing songs and using AI to create the music that I envisioned for the song, I put a lot of energy into learning how to communicate my heart to AI algorithms to reproduce what I hear inside as I write my lyrics. I had no plans to release any of my “demos” for two distinct reasons.

Why Not?

I knew I needed live singers and players to fix subtle imperfections, like adding a half-second pause here, taking away a pause there, going up instead of down in this place, and holding that syllable longer in that place. I was happy to hand the music to anyone who could redo for the song what AI had already done for it, performers who could hear the demo as my heart singing and respond with their own versions of the music. Perhaps that is asking a lot.

The other reason involved the open hostility that I received from performers at the very idea of AI music. In my last article, I wrote about how hypocritical I find this, given that none of them would give any of my songs even a ten-minute glance, never mind actually helping me to bring them to fruition. But still, angry statements like “NO ONE will ever accept you in the industry!!!” and throwing around words like “theft” and “fake” and “soulless” have a way of warning one off from that path.

Things Changed

But two things started happening as I pursued those live singers and players.

#1: No one will be more passionate about your project than you are. So, while I received a lot of interest because the demos are good, I haven’t found many who are enough of “self-starters” to take the project and run with it. I can’t get them to actually learn the songs, or get into our studio to record them. A lot of talk, little action. There are a couple who have taken real steps to partner with me, and I hope you will be hearing live versions from some of them sooner rather than later, but on the whole… talk talk… delay delay delay.

Being a writer, a teacher, an editor, my own social media promoter, a preacher, and an assistant pastor leaves me juggling too many balls to spend all my time hounding performers to take the next practical step. I am artistically inclined, but have too many years of blue-collar and academic training to allow that inclination to do the driving. “Get-er-done” keeps “I’m just not feeling it right now” on a short leash.

#2: Both my skills in communicating with the AI programs (I work with three in tandem) and the AI programs themselves are improving to the point that most of the subtle imperfections in my early songs are not being repeated in my more recent creations. The songs are getting good enough as demos, I believe, to perform well in the digital markets, which are the only markets in which I have an interest. I’m certainly not going to invent a weird character and go on stage and spin my songs like a DJ for a concert performance. Hmmmm… maybe I could…. No… forget that I even entertained that idea.

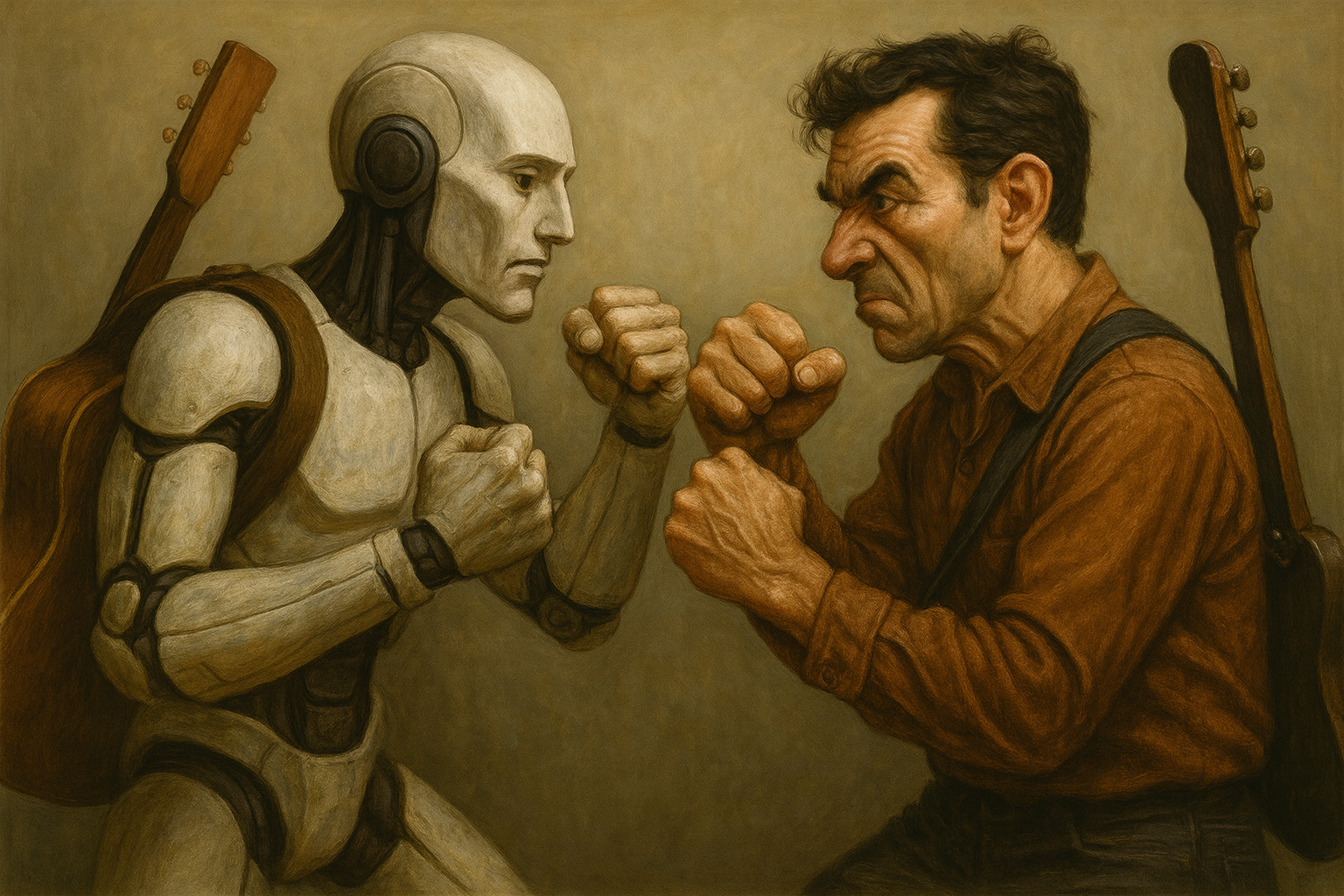

At the Crossroads (without the Devil I hope)

All these intersecting realities leave me at the crossroads. I’m at a point of decision as to how much energy I should devote to which path. Potential earnings could break the artistic stalemate with those who promise help but don’t deliver. Profit sharing is not the most alluring prospect for artsy types, but cash talks and walks. So publishing and promoting some of my best demos in digital markets could help me better fulfill my original vision. A single hit would make God Song. It could also garner the attention of successful performers who have more than likely learned the skill of follow through.

Aye There’s the Rub

So here is the rub. The offense that performing artists feel about AI music often overrides their supposed interest in producing good music.

While some of my friends fuss about authenticity in the music—I would never use AI to do my writing for me… if for no other reason than that AI does not write as well as I do—I am concerned with giving the Church biblically-rooted, theologically-sound, edifying songs to sing. If you care about ministry more than you do about scratching your ego over your personal sense of “authenticity,” I would think that you would rejoice in quality songs… and not say things like “The better your songs are the more I will hate them.”

I Get It

I do understand the concerns. I’ve drawn my own line in the sand at never allowing AI to do my thinking for me or do my writing for me. Others, however, are so fearful or indignant about the threat that AI poses to “real” artists that they would happily sacrifice quality songs to maintain a sense of control, to keep the “no talent clods” out of the markets. That makes you a gatekeeper by the way… not a good thing to be.

Déjà Vu All Over Again

The entire history of music industry growth has been a fearful lurch forward to the disgruntlement of those succeeding in the present state of things. Recordings (canned music) would destroy live performance, “the menace of Mechanical Music” (John Philip Sousa, 1906). Player pianos would leave us without home learning. Amplifiers and electric guitars were inauthentic. Synthesizers made fake music. Auto-Tune would destroy everything, erasing the flawed performances of real voices. DAWs and Pro Tools would never produce a decent record. Drummers resented drum machines. Good music still comes out.

Frankly, Red Scarlett, I Don’t Give a Drum

Frankly, the public has a different interest than artists. They want good songs to sing. They want to dance. They are far less concerned with how the music was made. Each generation of performers proves itself ready to agree with the masses about past inventions, but then bandies about the same age-old complaints about the next invention.

Oh! The Hypocrisy!!!

And what do those disgusted with AI actually get up to in their performances and record making? That’s a lot more hypocrisy. I’ll make the list quick, forgive any minor errors, and forgive me for what I am about to do to dedicated readers. (If you are content with the idea, skim the next seven items and move to the end. It will be better for your mental health.)

Vocal Manipulation is Standard

1. Vocal Manipulation & Enhancement: Pitch & Timing… Auto-Tune (Antares) – automatic pitch correction; can be used subtly or as an effect. Melodyne (Celemony) – manual pitch/time correction with natural phrasing control. Waves Tune / Logic Flex Pitch / Cubase VariAudio – integrated alternatives. Elastic Audio / Revoice Pro – align multiple takes, double voices. Tone & Texture… Formant shifters – change vocal timbre without changing pitch. De-essers – tame sibilance. Vocal Rider / Leveler – automate consistent loudness. Harmonizers – generate artificial backing vocals (Eventide H3000, Soundtoys Little AlterBoy). Vocal synths – full voice modeling (iZotope VocalSynth, Emvoice One, SynthV). Performance Correction. Quantization of vocal timing – locks phrasing to tempo grid. Comping tools – splice best syllables from many takes into one seamless “performance.”

Instrumental Manipulation is Standard

2. Instrumental Manipulation: Electric & Acoustic Instruments. Amp simulators – replace real amps (Line 6 Helix, Kemper Profiler, Neural DSP). Cabinet impulse responses (IRs) – digitally model mic’d speaker cabinets. Virtual drums & drum replacement – Trigger, Superior Drummer, Addictive Drums. Drum quantization – locks rhythm to perfect time, destroying human swing. Sample libraries – orchestras, choirs, guitars rendered via MIDI rather than humans. Performance Reshaping. Groove templates – impose another player’s timing feel. Velocity humanizers – paradoxically add randomness to make MIDI sound human again.

Mixing and Mastering Automation is Standard

3. Mixing & Mastering Automation: Dynamic Control. Compressors / limiters – even out volume; often multiband or look-ahead types. Transient shapers – alter attack/decay characteristics. Side-chain compression – create rhythmic pulsing (common in EDM). EQ & Frequency Tools. Dynamic EQ – automatically adjusts tone per moment. Resonance suppressors – Soothe2, Gullfoss “listen” and self-correct frequencies. Spectral balancing AI – Neutron (iZotope), Smart:EQ (Sonible). Mastering Algorithms. AI mastering platforms – LANDR, eMastered, CloudBounce, Ozone Master Assistant. Loudness normalization & limiting – conform to streaming specs automatically.

Spatial, Time, and Texture Effects are Standard

4. Spatial, Time, and Texture Effects: Reverb / convolution reverb – simulate specific rooms or halls. Delay / echo / slapback / chorus / flanger / phaser – motion and width effects. Stereo wideners – spread mono sources artificially. 3D audio processors – binaural and ambisonic spatial filters for immersive sound. Noise gates & expanders – remove background noise dynamically.

Intelligent/AI-Assisted Systems are Standard

5. Intelligent / AI-Assisted Systems: Production & Composition. Chord and key analyzers – Scaler 2, Captain Chords, Hooktheory. Auto-arrangers – Band-in-a-Box, EZkeys, Suno “style presets.” AI drummers / bassists – Logic’s Drummer, UJAM virtual players. AI stem separation – RipX, LALAL.ai, Demucs. Mixing & Mastering. AI mix assistants – iZotope Neutron, Waves Clarity Vx, Sonible smart:comp. Reference matchers – match EQ/compression to commercial tracks. Automatic vocal isolation – Clarity, Unmix Vocal, RX Music Rebalance.

Live Performance Enhancements are Standard

6. Live Performance Enhancement: In-ear monitoring with pitch and blend control – personal vocal pitch mix. Backing tracks / click synchronization – ensures perfect timing with pre-recorded parts. Vocal processors (TC-Helicon, Boss VE series) – live harmony, reverb, tuning in real time. MIDI controllers & loopers – trigger pre-arranged patterns mid-show. Lighting/audio automation – DMX linked to DAW tempo.

Visual and Streaming Processing is Standard

7. Visual and Streaming Processing: Lip-sync correction & video alignment tools (e.g., in DaVinci Resolve, Premiere). Audio restoration suites – RX, Clarity, removing breaths, pops, and plosives. Noise gating in streaming software – OBS filters, Nvidia Broadcast AI background noise removal.

I Need an Aspirin

I’m dizzy just putting all that down in print.

And my point? “Old men like me don’t bother with making points. There’s no point.” (Matrix Reloaded quote).

My point is this. Let’s all take a breath. Celebrate good songs. Help each other rather than tear each other down. The most important thing is that the church is blessed with good songs that teach, encourage, comfort, and disciple.

I’m a writer. I write as easily as I breathe. I don’t begrudge people wanting to write. Nor do I begrudge people getting help from what tools are available so long as the ideas are theirs, the thinking theirs. If running your writing through AI helps you write what you want to say better, more power to you.

And I say that as a guy who has given multiplied thousands of hours helping other people accomplish their writing goals. And all I wanted when I started with songwriting was the same kind of help from those who do that well.

If you are interested in partnering with me, singing my songs, or helping me make live versions to publish, let me hear from you. Maybe we can work something out.

~Andrew D. Sargent, PhD
